All published articles of this journal are available on ScienceDirect.

Perception, Awareness, and Ethical Use of AI in Scientific Research: A Study among Healthcare Researchers in Jeddah

Abstract

Introduction

Artificial intelligence is increasingly embedded in research workflows, yet evidence on how healthcare researchers perceive and use AI in Saudi Arabia remains limited. This study assessed awareness, use, and ethical perceptions of AI among healthcare researchers in Jeddah.

Methods

We conducted a descriptive cross-sectional survey using a bilingual, expert-validated questionnaire. Non-probability convenience sampling yielded 1,379 respondents (response rate 74.9%). Descriptive statistics, chi-square tests, and independent t-tests examined subgroup differences by gender, education, and research experience.

Results

Most participants recognized AI in their research tools (81.8%), while 56.7% reported active use. AI use was higher among postgraduates than bachelor’s holders (72.2% vs 54.5%; p=0.002) and among those with ≥5 years versus <5 years’ experience (70.1% vs 45.3%; p=0.005). Ethical concerns were reported by 47.6%, with higher concern among women than men (60.2% vs 42.1%; p=0.019). Perceived benefits were common: 78.0% agreed AI improved research quality, and 78.6% reported enhanced productivity. Willingness to work in AI-enabled environments reached 77.1%, contingent on safeguards for privacy, authorship, and fairness.

Discussion

Findings indicate high awareness but only moderate adoption of AI, with usage concentrated among more educated and experienced researchers, alongside notable gender differences in ethical sensitivity. These patterns suggest capability gaps that may limit responsible uptake without targeted support.

Conclusion

Institutions should embed practical AI literacy, hands-on training, and gender-responsive ethics guidance within research development programs and governance frameworks to translate AI awareness into confident, ethical AI use. Such measures align with national priorities and enable safe, equitable integration of AI across healthcare research settings.

1. INTRODUCTION

Artificial intelligence (AI) is rapidly transforming the landscape of scientific research. From literature reviews and data analysis to manuscript drafting and predictive modeling, AI tools are increasingly embedded into academic workflows [1, 2]. In the health sciences, AI enhances clinical decision-making, diagnostic accuracy, and research productivity, offering researchers considerable advantages in speed, precision, and scope [3, 4].

However, the integration of AI into academic research raises pressing ethical questions, including those related to data privacy, authorship integrity, intellectual property, and the potential erosion of academic rigor [5, 6]. These concerns have prompted calls for institutional guidelines and cultural safeguards to govern AI’s use, particularly in sensitive sectors like healthcare.

In Saudi Arabia, AI adoption is gaining momentum in alignment with the digital transformation agenda of the national Vision 2030. As health research becomes increasingly digitized, it is vital to understand how local researchers perceive and use AI tools, and whether they are equipped to do so ethically and effectively. Previous studies have explored student attitudes, curriculum readiness, and healthcare worker perspectives on AI, but very few have focused on healthcare researchers themselves [7, 8].

Moreover, no large-scale studies have evaluated how demographic factors such as research experience, education level, or gender influence AI usage and ethical concerns in the Saudi academic setting. This presents a critical gap in the regional literature.

Therefore, this study aims to assess the awareness, usage, and ethical perceptions of AI among healthcare researchers in Jeddah, Saudi Arabia. The findings are expected to support the development of locally relevant AI governance policies and targeted researcher education programs.

2. LITERATURE REVIEW

As artificial intelligence (AI) becomes increasingly integrated into research environments, global and regional scholars have examined how healthcare professionals perceive and engage with this digital transformation. Existing studies have focused on three key domains: perception and attitudes, awareness and training, and ethical concerns. Each domain offers insights into the factors influencing AI adoption, yet the literature also reveals substantial gaps, particularly within the context of healthcare research in the Middle East.

2.1. Perception of Artificial Intelligence in Healthcare Research

Recent studies indicate growing optimism about AI’s potential in healthcare and academic research. In Saudi Arabia, surveys of medical and health sciences students have shown moderate readiness for AI, emphasizing cognitive awareness, technical skills, and ethical understanding [7]. A nationwide survey across 21 Saudi universities further highlighted positive attitudes toward integrating AI into medical curricula to support Vision 2030 goals [9].

Among professionals, researchers have expressed confidence in AI’s capacity to enhance diagnostic accuracy and decision-making [3]. Exposure to AI tools has also been linked to greater interest in interdisciplinary collaboration and more efficient research practices [10]. However, technological unfamiliarity has led to skepticism among researchers with limited digital experience, suggesting disparities in AI adoption based on exposure levels [11]. Notably, researchers in urban centers have reported higher enthusiasm and AI integration than their rural counterparts [12].

2.2. Awareness and Knowledge of AI Among Healthcare Professionals

Awareness and knowledge of AI vary widely across institutions and across the roles of healthcare professionals. A systematic review of AI in Saudi medical education identified a lack of structured curricula and called for formal AI training at both undergraduate and postgraduate levels [8]. In Northern Saudi Arabia, a cross-sectional study found low to moderate AI-related knowledge among health science students, with formal training shown to improve competency levels significantly [13].

Faculty members and research staff also demonstrate limited understanding of AI applications. In one study, both students and faculty lacked practical exposure to AI, which hindered the meaningful integration of AI into health research [12]. Conversely, workshops and hands-on sessions have proven effective in improving researchers' familiarity, confidence, and ethical literacy when using AI [14]. Studies have also shown that the availability of multilingual AI tools enhances accessibility and understanding among Saudi researchers from diverse backgrounds [15].

2.3. Ethical Considerations in the Use of AI

The ethical use of AI in research has generated global concern. Key issues include data privacy, algorithmic bias, and the lack of transparency in AI-generated outputs [6]. A Saudi-based study stressed the need for local governance mechanisms to regulate AI in healthcare, particularly with respect to patient data and consent [16]. Another regional analysis emphasized the importance of culturally sensitive ethics frameworks tailored to Muslim-majority contexts [13].

Gender-specific patterns in ethical sensitivity have also emerged. Female researchers, in particular, have voiced concerns about fairness, academic authorship, and intellectual property protection [5]. Calls for collaboration between ethics committees, institutional review boards, and AI developers have intensified, with the aim of building trust and ensuring responsible AI use in health research environments [6].

2.4. Synthesis and Research Gap

In summary, while recent studies across Saudi Arabia and globally have highlighted a growing interest in AI within health-related fields, they have largely focused on students, clinicians, or educators. Very few studies examine the perceptions and ethical concerns of healthcare researchers themselves, particularly in large-scale, demographically stratified samples. Furthermore, there is limited empirical exploration of how gender, educational background, and research experience influence the adoption of AI and ethical stances toward it. This study addresses that gap by providing evidence from 1,379 healthcare researchers in Jeddah, offering a contextualized understanding of AI usage and ethical awareness within Saudi Arabia’s academic research sector.

3. METHODS

3.1. Study Design

This study employed a descriptive cross-sectional design to investigate the awareness, perception, and ethical concerns related to artificial intelligence (AI) among healthcare researchers in Jeddah, Saudi Arabia. The study adhered to STROBE reporting guidelines for observational research. A total of 1,379 healthcare researchers in various academic, institutional, and hospital-based settings across the public and private sectors participated in this study, and the overall response rate was 74.9%. Participants were recruited using a non-probability convenience sampling technique.

3.2. Setting and Duration

The research was conducted in Jeddah, Saudi Arabia, from January to August 2024. Participants were drawn from academic, hospital-based, and institutional research environments across public and private sectors.

3.3. Participants and Eligibility Criteria

Eligible participants were healthcare researchers and affiliated research staff with at least one year of experience in biomedical, clinical, or public health research. Individuals with no formal research experience or those working outside healthcare-related disciplines were excluded. Participation was voluntary and based on informed consent.

3.4. Sample Size Determination

A total of 1,379 individuals completed the survey, yielding a response rate of 74.9%. The sample size exceeded the minimum required to detect subgroup differences based on gender, education, and research experience with a confidence level of 95% and a power of 0.80, assuming a moderate effect size.
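The minimum sample size referred to above can be approximated with a standard power calculation. The sketch below is illustrative only: the source does not state which effect-size metric or formula was used, so Cohen's h for two proportions, a "moderate" value of h = 0.5, and the function names `cohens_h` and `n_per_group` are all assumptions introduced here.

```python
import math
from statistics import NormalDist


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h effect size for the difference between two proportions."""
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


def n_per_group(h: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Minimum n per group for a two-sided, two-sample comparison of
    proportions (normal approximation on the arcsine scale)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for target power
    return math.ceil(2 * ((z_alpha + z_beta) / abs(h)) ** 2)


# Assumed "moderate" effect size (Cohen's convention: h = 0.5).
print(n_per_group(0.5))

# Effect size implied by the reported usage rates for the experience subgroups.
print(round(cohens_h(0.701, 0.453), 2))
```

Under these assumptions the required group sizes are small relative to the smallest reported subgroup (363 bachelor's degree holders), which is consistent with the statement that the achieved sample exceeded the minimum.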

3.5. Sampling Technique

A non-probability convenience sampling strategy was used. Participants were recruited through institutional mailing lists, academic networks, and professional forums. Although this method may introduce selection bias, the large and diverse sample enhances the relevance of the findings to the local research context.

3.6. Instrument and Data Collection

Data were collected using a bilingual (Arabic-English), self-administered online questionnaire. The instrument was developed based on a literature review and validated by a panel of experts in AI and medical research ethics. It included five sections: (1) demographics; (2) AI awareness; (3) AI usage in research; (4) perceptions of AI’s role in scientific work; and (5) ethical concerns. Most items used a five-point Likert scale. A pilot test with 50 participants was conducted to refine clarity and content; their responses were excluded from the final analysis. Cronbach’s alpha indicated good internal consistency (awareness: α = 0.81; perception: α = 0.79).
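For readers unfamiliar with the reliability coefficient reported above, the following minimal sketch shows how Cronbach's alpha is computed from item-level scores. The response matrix `demo` is hypothetical and is not drawn from the study data; the function name `cronbach_alpha` is likewise introduced here for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    """
    k = len(responses[0])
    items = list(zip(*responses))                     # one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])  # variance of summed scale
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical five-point Likert responses (rows = respondents, columns = items).
demo = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]
print(round(cronbach_alpha(demo), 2))
```

Values closer to 1 indicate that the items of a scale vary together, which is the sense in which the awareness and perception scales are described as internally consistent.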

3.7. Statistical Analysis

Data were analyzed using IBM SPSS Statistics version 26. Descriptive statistics (frequencies, percentages, means, and standard deviations) were used for all variables. Inferential analyses included chi-square tests for categorical variables and independent t-tests for continuous variables. The Kolmogorov–Smirnov test confirmed normality of distribution. Statistical significance was set at p < 0.05.
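As an illustration of the chi-square test for categorical variables, the sketch below computes the Pearson statistic and p-value for a 2×2 table using only the Python standard library. It is not the authors' SPSS procedure: the counts are hypothetical, no continuity correction is applied, and the function name `chi_square_2x2` is an assumption introduced here.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and the p-value for 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: rows = two subgroups, columns = uses AI / does not use AI.
chi2, p = chi_square_2x2(30, 20, 18, 32)
print(f"chi2 = {chi2:.3f}, p = {p:.4f}")
```

A p-value below the 0.05 threshold stated above would be read as a statistically significant association between subgroup membership and AI use.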

3.8. Ethical Considerations

The study was approved by the Institutional Review Board (IRB) in Jeddah (Approval No. KSA: H-02-J-002, dated September 9, 2024). All participants provided informed consent prior to participation. Data were anonymized, and access was restricted to the principal investigators to maintain confidentiality.

4. RESULTS

4.1. Participant Characteristics

A total of 1,379 healthcare researchers and affiliated staff from various institutional settings in Jeddah, Saudi Arabia, participated in the study. The mean age of participants was 36.9 years (SD ± 8.5), with female respondents representing 60.8% (n = 838) of the sample. Regarding academic attainment, 73.7% (n = 1,016) held postgraduate degrees, and 62.6% (n = 863) had at least five years of research experience. Detailed demographic characteristics are summarized in Table 1.

| Variable | Number / Percentage / Mean ± SD |
|---|---|
| Age (mean ± SD) | 36.9 ± 8.5 |
| Gender: Female | 838 (60.8%) |
| Gender: Male | 541 (39.2%) |
| Education: Postgraduate | 1,016 (73.7%) |
| Education: Bachelor | 363 (26.3%) |
| Experience ≥ 5 years | 863 (62.6%) |
| Experience < 5 years | 516 (37.4%) |

| Variable | Number / Percentage |

|---|---|

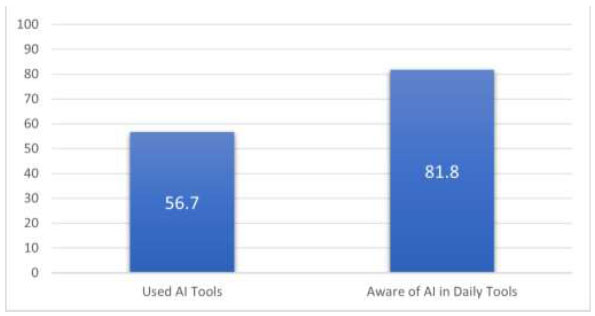

| Used AI tools in research | 782 (56.7%) |

| Aware AI is used in tools daily | 1,127 (81.8%) |

| Used AI by ≥5 yrs experience | 70.1% |

| Used AI by <5 yrs experience | 45.3% |

| Postgraduates using AI | 72.2% |

| Bachelors using AI | 54.5% |

Awareness and usage of AI tools.

Proportion of participants reporting AI usage and daily recognition in research tools.

4.2. Awareness and Usage of Artificial Intelligence

Most participants (81.8%, n = 1,127) recognized that AI tools were embedded in their daily research software and platforms. However, only 56.7% (n = 782) reported actively using AI in their scientific work. Participants with ≥5 years of experience had significantly higher AI usage (70.1%) compared to those with <5 years (45.3%) (p = 0.005). Similarly, postgraduate participants reported greater AI use (72.2%) than bachelor's degree holders (54.5%) (p = 0.002). There was no significant difference in usage based on gender (p = 0.112). These patterns are summarized in Table 2 and illustrated in Fig. (1).

4.3. Perception of AI in Research

The majority of respondents viewed AI favorably. Specifically, 78.0% (n = 1,075) believed AI improved research quality, and 78.6% (n = 1,084) felt it enhanced their personal research productivity. More than half (52.9%, n = 729) reported that AI assisted them in addressing complex research questions, including literature searching and hypothesis generation. However, 53.3% (n = 734) believed AI posed risks to intellectual property rights. Ethical concerns were expressed by 47.6% (n = 657), with significantly more concerns reported among female respondents (60.2%) compared to males (42.1%) (p = 0.019). These findings are summarized in Table 3.

| Statement | Number / Percentage |
|---|---|
| AI improves research quality | 1,075 (78.0%) |
| AI enhances individual research skills | 1,084 (78.6%) |
| AI helps answer queries | 729 (52.9%) |
| AI risks intellectual property loss | 734 (53.3%) |
| Concerned about ethical issues | 657 (47.6%) |

| Subgroup Comparison | Result | p-value |
|---|---|---|
| Experience (≥5 vs <5 years) | Higher usage among ≥5 yrs (70.1% vs 45.3%) | 0.005 |
| Education (Postgrad vs Bachelor) | Higher usage among Postgrads (72.2% vs 54.5%) | 0.002 |
| Gender (Female vs Male, Ethical concern) | Female: 60.2%, Male: 42.1% | 0.019 |

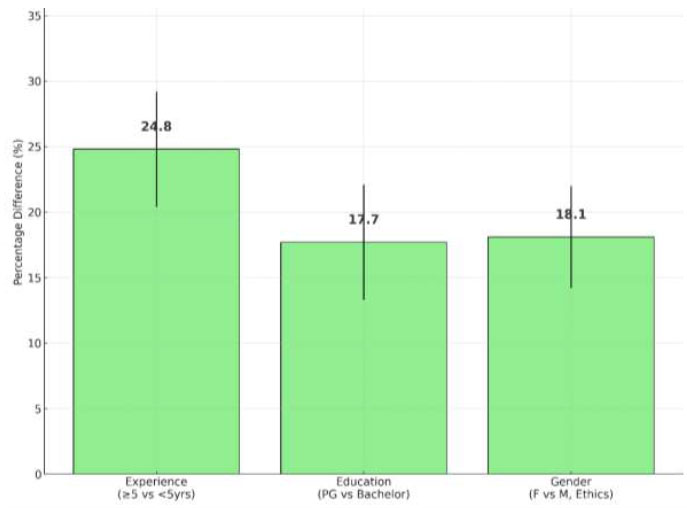

Subgroup differences in AI usage and ethical concerns (with 95% confidence interval error bars).

4.4. Preferences for AI Integration

When asked about their preferences, 77.1% (n = 1,063) indicated willingness to work in AI-supported research environments provided ethical safeguards were in place. This preference was especially strong among postgraduate researchers (82.1%) and those with ≥5 years of experience (81.3%), reinforcing the association between higher training, greater experience, and openness to AI.

4.5. Subgroup Differences in AI Usage and Ethics

Subgroup analyses confirmed that both academic level and research experience were significantly associated with higher AI usage and more favorable perceptions. In contrast, ethical sensitivity—particularly regarding data privacy and fairness—was more prominent among women and postgraduate researchers. These patterns are displayed in Table 4 and visualized in Fig. (2).

Bars represent the percentage difference in AI usage or ethical concern between demographic subgroups. Error bars indicate 95% confidence intervals.
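A 95% confidence interval for a proportion can be sketched as follows. The source does not state which interval method was used, so the Wald (normal-approximation) interval and the function name `wald_ci` are assumptions; the example input is the overall AI usage count reported in the study (782 of 1,379).

```python
import math
from statistics import NormalDist


def wald_ci(successes: int, n: int, level: float = 0.95):
    """Wald (normal-approximation) confidence interval for a proportion."""
    p = successes / n
    z = NormalDist().inv_cdf(0.5 + level / 2)        # about 1.96 for 95%
    half_width = z * math.sqrt(p * (1 - p) / n)       # z times the standard error
    return p - half_width, p + half_width


# Overall AI usage reported in the study: 782 of 1,379 respondents.
low, high = wald_ci(782, 1379)
print(f"{low:.1%} to {high:.1%}")
```

The interval narrows as the sample size grows, so subgroup estimates carry wider intervals than the overall estimate.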

Further subgroup analysis revealed that AI usage and ethical concerns varied across key demographic characteristics. Female researchers reported higher ethical concern levels compared to males (60.2% vs. 42.1%, p = 0.019), while AI usage was more common among postgraduates than bachelor’s degree holders (72.2% vs. 54.5%, p = 0.002). Respondents with five or more years of research experience also demonstrated greater AI usage than those with less experience (70.1% vs. 45.3%, p = 0.005). These comparisons are summarized for clarity in Table 5.

| Demographic Variable | Group Comparison | AI Usage (%) | Ethical Concern (%) | p-value |
|---|---|---|---|---|
| Gender | Female vs. Male | 59.7% (CI: 56.1–63.3%) vs. 53.1% (CI: 49.5–56.7%) | 60.2 vs. 42.1 | 0.019 |
| Education | Postgraduate vs. Bachelor | 72.2% (CI: 67.8–76.6%) vs. 54.5% (CI: 49.8–59.2%) | Not reported | 0.002 |
| Experience | ≥5 years vs. <5 years | 70.1% (CI: 65.7–74.5%) vs. 45.3% (CI: 41.0–49.6%) | Not reported | 0.005 |

5. DISCUSSION

This study provides timely insights into how healthcare researchers in Jeddah, Saudi Arabia, perceive and engage with artificial intelligence (AI) in the context of scientific research. The results indicate high awareness (81.8%) but moderate active usage (56.7%) of AI tools among participants. AI adoption was significantly associated with both higher academic qualifications and greater research experience, while ethical concerns—reported by nearly half of respondents—were more frequently expressed by female researchers.

These findings mirror international trends where early-career professionals often report limited AI use due to a lack of training or confidence [17, 18]. In the Saudi context, our results align with recent student-focused surveys showing a readiness gap in AI competency and ethical preparedness [7, 8]. The present study, however, extends that knowledge to practicing researchers and faculty, making it one of the few large-scale, region-specific investigations in this domain.

The positive association between postgraduate education and AI engagement suggests that exposure to advanced research methodologies facilitates comfort with AI tools. Similarly, the association between experience and usage underscores the role of professional maturity in embracing emerging technologies. These observations support the Technology Acceptance Model, which emphasizes perceived usefulness and self-efficacy as predictors of technology adoption [19].

Ethical sensitivity emerged as a key differentiator, particularly among female respondents. This finding is consistent with prior literature suggesting gender-based variation in perceptions of risk and responsibility in digital research environments [11, 14]. It also highlights the need for gender-responsive AI ethics education and inclusive policy design.

Gender, education level, and research experience were identified as key factors associated with AI use and ethical concerns. Female researchers showed greater ethical sensitivity, while researchers with postgraduate education and more years of research experience reported higher levels of AI use. These results highlight the need for tailored AI training and ethics support that account for differences among demographic groups.

Despite notable support for AI integration, respondents were clear in their desire for institutional safeguards. Nearly 77% expressed willingness to work in AI-enabled environments, provided that ethical policies and privacy protections were in place. This demonstrates a pragmatic openness to innovation tempered by responsible research values.

These findings carry important implications for institutions, policymakers, and research funders. Universities and research centers should integrate AI literacy, ethical training, and tool-specific workshops into postgraduate curricula and continuing education programs. Ethics review boards should also develop AI-specific guidelines to address emerging challenges related to authorship, data use, and transparency.

In addition, policy frameworks must consider demographic differences in AI readiness. For example, targeted mentorship for early-career researchers and greater access to multilingual AI platforms could bridge gaps in confidence and capability. Aligning such initiatives with Saudi Arabia’s Vision 2030 digital transformation goals will be key to sustainable progress.

6. LIMITATIONS

This study has several limitations. The use of non-probability sampling may affect generalizability beyond Jeddah. As a cross-sectional design, it cannot establish causal relationships or capture changes over time. Self-reported data may also be subject to social desirability bias, particularly regarding ethical concerns and AI usage. Lastly, while the questionnaire was validated, the closed-ended items limited deeper qualitative insights.

CONCLUSION AND RECOMMENDATIONS

This study demonstrates that healthcare researchers in Jeddah are highly aware of artificial intelligence in their research environments, yet only a moderate proportion actively utilize these tools. AI usage was significantly associated with higher academic qualifications and greater research experience, while ethical concerns—particularly around intellectual property, data transparency, and fairness—were more prevalent among female participants. These patterns suggest both an enthusiasm for AI integration and a cautious stance toward its unregulated use.

Institutions must respond by embedding AI literacy, ethics training, and data governance into research training frameworks. Such efforts can bridge the gap between awareness and confident use, particularly for early-career professionals and researchers with limited exposure. Ethical oversight bodies should also consider gender-sensitive approaches to AI policy development, given the differing concerns observed.

Demographic differences in AI usage and ethical concerns further highlight the need for targeted support strategies based on experience level, gender, and educational background.

The findings support the development of national strategies aligned with Saudi Arabia’s Vision 2030, ensuring that AI adoption in research settings is both effective and equitable. Future studies should include qualitative components to explore researchers’ lived experiences with AI and expand the analysis to diverse geographic and disciplinary contexts.

AUTHORS' CONTRIBUTIONS

The authors confirm their contribution to the paper as follows: R.S.B.: Served as the principal investigator and was responsible for the overall conceptualization of the study, development of the research framework, and coordination of the study design; W.A.B. and S.M.A.: Played key roles in data management and performed the statistical analyses; N.A.Q.: Provided critical revisions, offered expert guidance on the manuscript's structure, and ensured the academic rigor of the final version. All authors collaboratively reviewed, edited, and approved the final manuscript for submission.

LIST OF ABBREVIATIONS

| AI | = Artificial Intelligence |

| IRB | = Institutional Review Board |

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

The study was approved by the Institutional Review Board (IRB) in Jeddah (Approval No. KSA: H-02-J-002, dated September 9, 2024).

HUMAN AND ANIMAL RIGHTS

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or research committee and with the 1975 Declaration of Helsinki, as revised in 2013.

AVAILABILITY OF DATA AND MATERIAL

The data supporting the findings of this study are available from the corresponding author [R.S.B.] upon reasonable request.

ACKNOWLEDGEMENTS

The authors extend their sincere appreciation to Dr. Muhammad Abdul Samad for his intellectual contributions and academic mentorship throughout the development of this research project. His guidance significantly enriched the study's design and interpretation. We also wish to acknowledge, with gratitude, the valuable time, participation, and insights shared by all healthcare researchers and research staff who completed the survey. Their cooperation was essential to the success of this study.